Why Recent AI Developments Are Paving the Way for Real-World Applications

In recent times, many have noticed a lull in groundbreaking AI announcements, especially when compared to the monumental releases of GPT-3 in 2020 or GPT-4 in 2023. The anticipation for GPT-5 has reached such a fever pitch that it could easily inspire a Netflix series. Conversations are buzzing with the idea that AI might be hitting a plateau, sitting at the peak of the Gartner hype cycle. This has fueled speculation that in the next few years, enthusiasm will wane, the investment bubble might burst, and we’ll return to our everyday lives, dismissing this as just another fleeting dream (or nightmare, depending on whom you ask).

But here’s the thing: more significant advancements have occurred in the past year than most people realize.

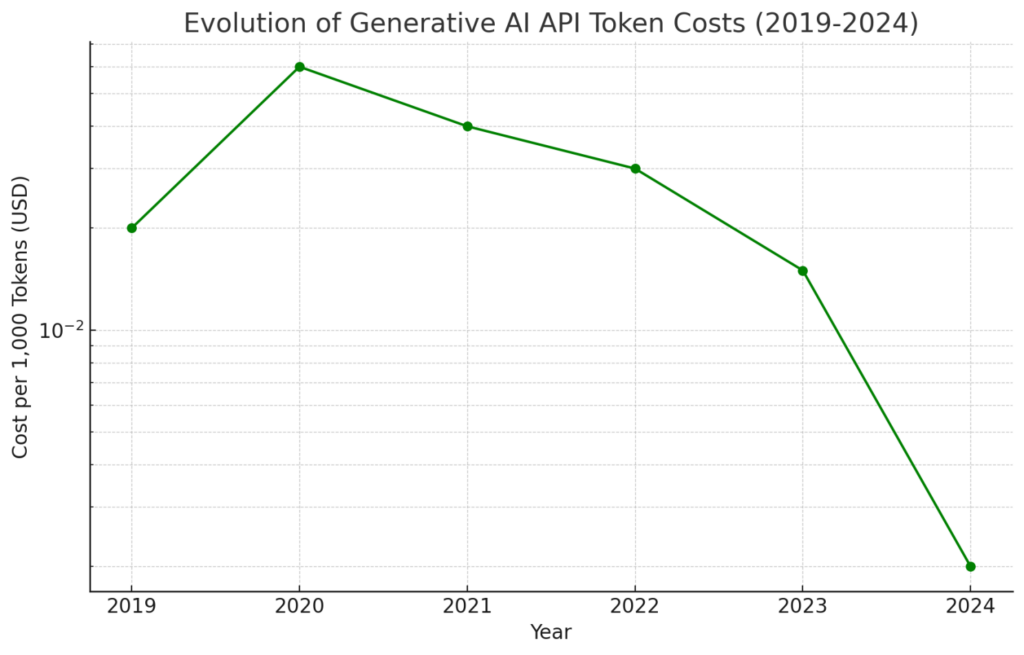

First and foremost, the cost of running generative AI has plummeted, by roughly a factor of ten in just a year. This is largely because large models are becoming smaller and better optimized, even as their ability to analyze and respond continues to improve. Hardware advancements, too, are playing a crucial role, with companies constantly updating the technology used to train and execute these models. NVIDIA’s rise to become one of the most valuable companies globally is a testament to this, but let’s not overlook niche disruptors like Groq. This ongoing evolution is crucial because it paves the way for using AI in applications where cost and processing speed are paramount.

The quality of AI has also surged forward: better knowledge bases, multilingual capabilities, and fewer hallucinations. The data used to train these models has been meticulously optimized and cleaned, thanks to previous AI generations. We’re now generating and refining billions of data points to train new models, a task so massive that only AI can handle it. Today’s models are more reliable, function across multiple languages and countries, and deliver faster, more accurate responses.

Another exciting development is the rise of multi-modal models. These models don’t just understand text; they can now interpret images, videos, sounds, and even voice inputs. This broadens the scope of potential use cases dramatically and sets the stage for future models to be trained on far more than just text, as was the norm before.

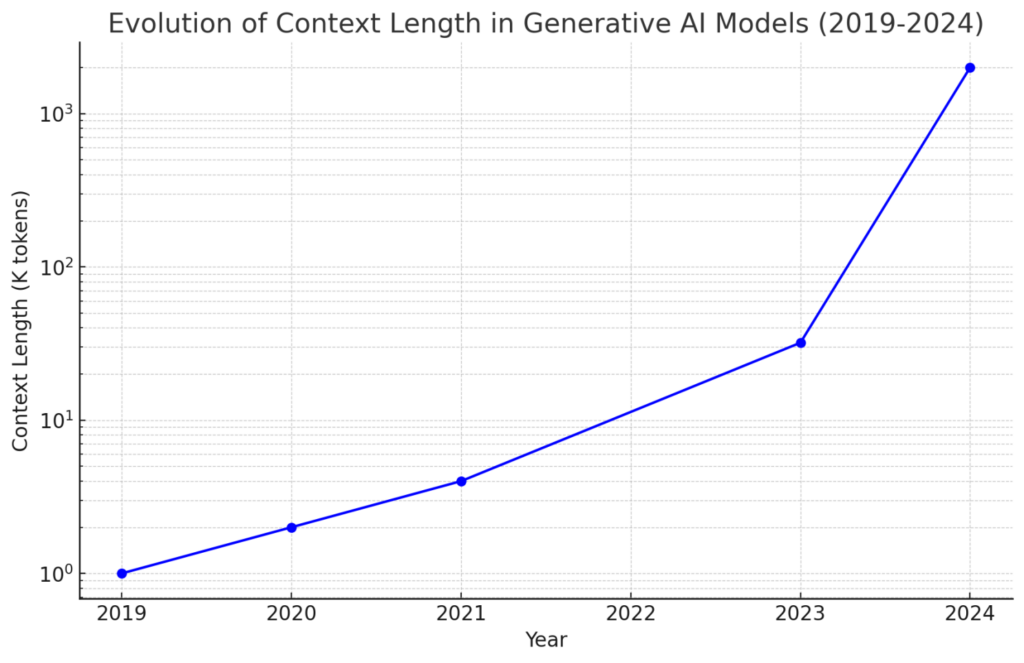

Generative AI has also expanded its capacity to process data as part of a single query or prompt. Just a year ago, 32K tokens were the standard, enough to cover a sizable chunk of text. But now, models like Google Gemini can analyze up to 2 million tokens in one go—we’re talking about processing an entire movie or a library’s worth of data in a single prompt. This leap in context length was necessary to accommodate the evolution of multi-modal capabilities.

A token is a small piece of text, like a word or part of a word, that AI models use to understand and process language. The same idea extends to images and audio, where a token represents a small piece of the data.
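To make the idea of a token concrete, here is a minimal sketch in Python. It is a toy: the `toy_tokenize` and `fits_in_context` helpers are names invented for this illustration, and the splitting rule (words and punctuation marks) is an assumption. Production models use learned subword tokenizers, so real token counts will differ.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (illustrative only)."""
    # \w+ matches a run of letters, digits, or underscores;
    # [^\w\s] matches a single punctuation character.
    return re.findall(r"\w+|[^\w\s]", text)


def fits_in_context(text: str, context_window: int) -> bool:
    """Return True if the toy token count fits within the given window."""
    return len(toy_tokenize(text)) <= context_window


sample = "AI models don't read words; they read tokens."
tokens = toy_tokenize(sample)
print(tokens)
print(len(tokens), "tokens")
print("Fits in a 32K window:", fits_in_context(sample, 32_000))
```

Even in this toy version, "don't" becomes three tokens, which hints at why token counts usually run higher than word counts.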

Moreover, context caching has emerged as a game-changer. Large context windows let a model work over vast amounts of data without specific training or external data searches (like Retrieval-Augmented Generation, or RAG), but processing such extensive contexts on every query is slow and expensive. The solution? Caching. Once a model has analyzed a large dataset, the result is kept in memory so that multiple queries can reuse the same context. This innovation saves processing power, speeds up responses, reduces costs, and makes multi-modal and large-context evolutions more practical for real-world use.
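The caching idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular provider implements it: `ContextCache` and `expensive_analyze` are hypothetical names, and a simple word count stands in for the costly step of a model processing a large context.

```python
import hashlib


class ContextCache:
    """Keep the result of analyzing a context so later queries can reuse it."""

    def __init__(self, analyze):
        self._analyze = analyze   # the expensive function to avoid repeating
        self._store = {}          # maps context hash -> analysis result
        self.analyze_calls = 0    # counts how often the expensive step ran

    def get(self, context: str):
        # Hash the context so very large inputs get a short, fixed-size key.
        key = hashlib.sha256(context.encode("utf-8")).hexdigest()
        if key not in self._store:
            self.analyze_calls += 1
            self._store[key] = self._analyze(context)
        return self._store[key]


def expensive_analyze(context: str) -> dict:
    """Stand-in for a model processing a large context (here: word counts)."""
    counts = {}
    for word in context.lower().split():
        counts[word] = counts.get(word, 0) + 1
    return counts


def answer(cache: ContextCache, context: str, word: str) -> int:
    """Answer a 'query' against the cached analysis of the context."""
    return cache.get(context).get(word.lower(), 0)


cache = ContextCache(expensive_analyze)
document = "the cat sat on the mat " * 1000

print(answer(cache, document, "the"))  # first query pays for the analysis
print(answer(cache, document, "cat"))  # second query reuses the cached result
print("Expensive analyses run:", cache.analyze_calls)
```

Both queries share one analysis pass, which is where the savings in time and cost come from when the same large context is queried repeatedly.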

As impressive as AI’s human-like interaction abilities are, the real magic happens when these models integrate into the digital world—as part of SaaS applications or digital factories, for instance. For this, we need models that understand structured information and can interact seamlessly with computer interfaces (APIs). Enter “function calling,” where models are given context about the types of services they can access and are then able to utilize those services. The obvious application here is web or intranet searches, but it goes much further. These models can now interact with nearly any computer or cloud-based application, unlocking endless new possibilities.
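A rough sketch of the function-calling loop, in Python, may help. Everything here is assumed for illustration: the `get_order_status` service, the `TOOLS` registry, and the JSON shape of the model's request are hypothetical, and the model's output is simulated rather than produced by a real model. Actual providers each define their own formats.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical business function; a real one would query an order system."""
    fake_orders = {"A100": "shipped", "B200": "processing"}
    return {"order_id": order_id, "status": fake_orders.get(order_id, "unknown")}


# Registry of services the model is told about (names and schema are assumptions).
TOOLS = {
    "get_order_status": {
        "description": "Look up the status of an order by its ID.",
        "parameters": {"order_id": "string"},
        "handler": get_order_status,
    }
}


def describe_tools() -> str:
    """Build the tool description that would be sent to the model as context."""
    specs = [
        {"name": name, "description": t["description"], "parameters": t["parameters"]}
        for name, t in TOOLS.items()
    ]
    return json.dumps(specs, indent=2)


def dispatch(model_output: str) -> dict:
    """Parse the model's structured request and run the matching function."""
    call = json.loads(model_output)
    tool = TOOLS.get(call["name"])
    if tool is None:
        return {"error": f"unknown tool: {call['name']}"}
    return tool["handler"](**call["arguments"])


# Simulated model output: in practice the model would generate this JSON.
simulated_model_output = '{"name": "get_order_status", "arguments": {"order_id": "A100"}}'
result = dispatch(simulated_model_output)
print(result)
```

The model never runs code itself in this sketch. It only emits a structured request, and the application decides whether and how to execute it, which keeps control over what the model can touch.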

The evolution of reasoning and coding abilities in AI is another critical milestone. While generating a poem is fun, it doesn’t have much commercial value. However, a model that can understand a problem, process requirement specifications, plan and execute actions, and even generate code on the fly to solve issues is a game-changer for businesses.

I could go on—about the rise of excellent open-source models from Meta, Mistral, Microsoft, and others, or about specialized models that excel at specific tasks, or even about smaller models that can now run on the latest smartphones equipped with dedicated neural network hardware. But the crux of my argument is this: the changes we’ve seen in the past year are as crucial for the adoption of AI technology as any flashy new breakthrough. These developments indicate that AI is maturing and is now ready to be integrated into business applications. It will take time for companies to fully grasp the potential of this technology and re-engineer their applications to harness it, but the time for real change—beyond mere hype or buzz—is now.

And while we’re busy building this incredible technology, which already has the potential to disrupt much of our professional lives, into our applications, rest assured that labs and companies are hard at work on the next generation. These advancements may bring new excitement to the world, perhaps even ushering in AGI (Artificial General Intelligence) or superintelligence. But there’s no need to be impatient. The revolution is already here.
